Key Takeaways

- A structured AI vendor selection process reduces risk and ensures that the chosen AI Application aligns with your organization’s long-term goals and operational needs.

- Clearly defining business needs, project scope, and deliverables before evaluating vendors prevents scope creep, budget overruns, and mismatched expectations.

- Using a weighted scoring model to rank AI Platforms reduces subjectivity, makes trade-offs between criteria explicit, and supports data-driven decision-making throughout the process.

- Total Cost of Ownership (TCO) analysis is more valuable than comparing upfront pricing alone because it accounts for maintenance, training, integration, and hidden costs.

- Vendor demos and proof-of-concept trials offer hands-on validation that no amount of proposal review or reference checking can replace.

- Security, compliance, and data privacy evaluations are non-negotiable steps when selecting any AI Application or AI Platform for enterprise use.

- Cross-functional team involvement from IT, legal, finance, and operations ensures a well-rounded evaluation and minimizes blind spots in vendor assessment.

- Negotiation is not just about lowering costs; it is about establishing clear SLAs, exit clauses, IP ownership, and long-term partnership terms that protect your interests.

- Continuous vendor performance monitoring after onboarding is essential to ensure sustained quality, accountability, and alignment with evolving business objectives.

- Partnering with experienced consultants like Nadcab Labs, with 8+ years in the field, can accelerate the vendor selection journey and help avoid common pitfalls.

Introduction: Why AI Vendor Selection Matters More Than Ever

In today’s rapidly evolving technology landscape, choosing the right AI Application or AI Platform can define the trajectory of an entire business. Whether you are a startup looking to integrate machine learning into your product or an enterprise aiming to overhaul operations with intelligent automation, the vendor you choose directly impacts your outcomes, timelines, and bottom line. Yet many organizations rush through this critical step, relying on brand recognition or the lowest bid instead of following a structured, evidence-based approach.

This comprehensive guide walks you through the complete vendor selection procedure, from the very first step of identifying your business needs to the final decision and ongoing performance management. Along the way, we will explore practical frameworks, comparison tables, real-world examples, and proven criteria that leading organizations use to evaluate AI Platforms and AI Applications. By the end of this article, you will have a clear roadmap that you can apply immediately to make smarter, more confident vendor decisions.

The importance of this process cannot be overstated. A Gartner study found that organizations that follow a structured vendor evaluation process are 2.5 times more likely to report satisfaction with their technology investments.[1] This guide will show you exactly how to build and execute such a process.

What Is the Vendor Selection Process? Key Objectives

The vendor selection process is a systematic approach to evaluating, comparing, and choosing the best external partner or supplier for a specific business requirement. When it comes to AI Applications and AI Platforms, this process takes on added complexity because the technology landscape is fragmented, rapidly changing, and filled with vendors making bold claims about their capabilities.

The key objectives of a well-structured vendor selection process include ensuring alignment between the vendor’s offerings and your business goals, minimizing financial and operational risk, establishing a foundation for a productive long-term partnership, and creating transparency and accountability in decision-making. Organizations that treat vendor selection as a strategic exercise rather than a procurement checkbox consistently achieve better outcomes.

Think of it this way: selecting an AI vendor is similar to hiring a key team member. You would not hire someone based solely on their resume. You would interview them, check references, test their skills, and ensure they fit your culture. The same rigor should apply to vendor selection.

Identifying Business Needs and Objectives

Every successful vendor selection journey begins with a thorough understanding of your own organization’s needs. Before you even begin looking at vendors, you must answer fundamental questions: What problem are we trying to solve? What outcomes do we expect? Which processes or departments will be affected? What is our timeline and budget?

For AI-specific initiatives, this step is particularly important because the scope of AI Applications is vast. You might need a natural language processing platform, a computer vision solution, a predictive analytics engine, or a full-stack AI Platform that covers multiple use cases. Without clarity on your specific needs, you risk evaluating vendors against the wrong criteria.

A practical approach is to create a needs assessment document that captures your current pain points, desired outcomes, technical constraints, integration requirements, user expectations, and success metrics. This document becomes the foundation for every subsequent step in the vendor selection lifecycle.

Pro Tip: Involve stakeholders from multiple departments early. IT teams understand technical requirements, operations teams know workflow pain points, finance teams set budget parameters, and leadership defines strategic priorities. A needs assessment that reflects all these perspectives produces far better results.

Defining Project Scope and Deliverables

Once your business needs are documented, the next step is translating them into a concrete project scope. This includes defining specific deliverables, milestones, timelines, and acceptance criteria. A well-defined scope prevents the two biggest risks in vendor engagements: scope creep and misaligned expectations.

For custom AI projects, scope definition should address the data requirements (volume, format, sources), the expected model accuracy or performance benchmarks, integration points with existing systems, user training and onboarding needs, and post-deployment support expectations. Clearly documenting these elements ensures that vendors can prepare accurate proposals and realistic implementation plans tailored to your organization’s goals.

Consider using a RACI (Responsible, Accountable, Consulted, Informed) matrix to clarify who owns what in the project. This is especially valuable when working with AI Platforms because the boundary between vendor responsibilities and internal team responsibilities can blur quickly without clear documentation.
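A RACI matrix is easy to get subtly wrong, for example by marking two parties Accountable for the same activity. The minimal Python sketch below checks a matrix against two common RACI conventions: exactly one Accountable party and at least one Responsible party per activity. The activities, party names, and the `raci_problems` helper are hypothetical illustrations, not a prescribed template.

```python
# Hypothetical RACI matrix for an AI Platform engagement (illustrative only).
# Each activity maps parties to one letter: R, A, C, or I.
RACI = {
    "Provide training data": {"Internal data team": "R", "Project sponsor": "A", "Vendor": "C"},
    "Train and tune models": {"Vendor": "R", "Internal data team": "C", "Project sponsor": "A"},
    "Integrate with CRM": {"Vendor": "R", "Internal IT": "A", "Operations": "C"},
    "User acceptance testing": {"Operations": "R", "Project sponsor": "A", "Vendor": "I"},
}

VALID_CODES = {"R", "A", "C", "I"}


def raci_problems(matrix):
    """Return human-readable problems; an empty list means the matrix is consistent."""
    problems = []
    for activity, roles in matrix.items():
        codes = list(roles.values())
        unknown = [c for c in codes if c not in VALID_CODES]
        if unknown:
            problems.append(f"{activity}: unknown codes {unknown}")
        # Convention: exactly one party is Accountable for each activity.
        accountable = codes.count("A")
        if accountable != 1:
            problems.append(f"{activity}: expected exactly one 'A', found {accountable}")
        # Convention: at least one party actually does the work.
        if "R" not in codes:
            problems.append(f"{activity}: nobody is Responsible")
    return problems


print(raci_problems(RACI))  # []
```

Running the same check whenever responsibilities are renegotiated helps keep the vendor/internal boundary from blurring over the course of the project.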

Establishing Vendor Selection Criteria

Establishing clear, measurable selection criteria is perhaps the most important step in the entire process. Without predefined criteria, evaluations become subjective and influenced by presentation skills rather than substance. Your criteria should reflect what truly matters for your organization and your specific AI Application requirements.

Common vendor selection criteria for AI Platforms include technical capability and feature set, industry experience and domain expertise, pricing and total cost of ownership, scalability and performance under load, security posture and compliance certifications, quality of customer support, integration capabilities with existing systems, financial stability and company reputation, innovation roadmap and product vision, and reference quality and client testimonials.

A best practice is to assign weights to each criterion based on its relative importance, with all weights summing to 100%. For example, if security is paramount for your industry, it might carry a 20% weight, while pricing might carry only 10%. This weighted approach ensures that the final vendor score reflects your priorities.

Sample Vendor Selection Criteria with Weightage

| Criteria | Weight | Description |
|---|---|---|
| Technical Capabilities | 20% | Features, architecture, AI model performance |
| Security & Compliance | 18% | Data protection, certifications, regulatory adherence |
| Scalability | 15% | Ability to handle growth in users, data, and workload |
| Industry Experience | 12% | Relevant case studies, domain expertise |
| Cost & TCO | 12% | Upfront, recurring, hidden, and maintenance costs |
| Support & SLAs | 10% | Response time, availability, escalation paths |
| Integration | 8% | API support, compatibility with current tech stack |
| Innovation Roadmap | 5% | R&D investment, future feature plans |
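Before the weights are used, it helps to confirm that they actually total 100%; otherwise every vendor’s final score is silently inflated or deflated. A minimal Python sketch, assuming the weights from the sample table above (the `validate_weights` helper is hypothetical):

```python
# Weights from the sample table, in percent.
WEIGHTS = {
    "Technical Capabilities": 20,
    "Security & Compliance": 18,
    "Scalability": 15,
    "Industry Experience": 12,
    "Cost & TCO": 12,
    "Support & SLAs": 10,
    "Integration": 8,
    "Innovation Roadmap": 5,
}


def validate_weights(weights):
    """Raise ValueError unless weights are non-negative and total exactly 100%."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total != 100:
        raise ValueError(f"weights total {total}%, expected 100%")
    # Return fractions (0.2, 0.18, ...) ready for weighted scoring.
    return {name: w / 100 for name, w in weights.items()}


fractions = validate_weights(WEIGHTS)
print(fractions["Security & Compliance"])  # 0.18
```

If a stakeholder wants to raise one weight, the check forces an explicit trade-off: some other criterion has to give up the same number of points.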

Creating a Longlist of Potential Vendors

With your criteria in place, the next step is to build a longlist of potential AI vendors. This list typically contains 10 to 20 vendors gathered through industry research, analyst reports (such as those from Gartner or Forrester), peer recommendations, online marketplaces, trade shows, and professional networks.

For AI Platforms specifically, sources like G2, Capterra, Clutch, and industry-specific directories can provide valuable starting points. Do not limit yourself to the most well-known names. Some of the most innovative and cost-effective AI Applications come from emerging vendors that may not yet have widespread brand recognition but offer cutting-edge capabilities and more personalized service.

At this stage, your goal is breadth, not depth. Cast a wide net and include any vendor that appears to meet your basic requirements. The narrowing process will come in the next step.

Shortlisting Qualified Vendors

Shortlisting is the process of reducing your longlist to a manageable number of vendors, typically 3 to 6, who will be invited to participate in the formal evaluation. This step uses your predefined criteria as a filter. Vendors that do not meet minimum thresholds for critical requirements (such as security certifications or required integrations) are eliminated.

A practical shortlisting method involves creating a simple pass/fail matrix for your must-have requirements and a basic scoring grid for your nice-to-have requirements. Vendors that pass all must-have criteria and score well on nice-to-have criteria make it to the shortlist.

Example: If your organization requires SOC 2 Type II compliance and a vendor does not hold this certification, they would be eliminated regardless of how well they score on other criteria. This approach ensures that your shortlist contains only vendors who can realistically meet your needs.
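The two-gate method described above can be sketched in Python as follows. The vendor data, requirement labels such as `soc2_type2`, the nice-to-have scores, and the `shortlist` helper are all hypothetical; the point is the order of operations, in which must-have requirements act as a hard filter before any scoring happens.

```python
# Hypothetical longlist; capability labels and scores are illustrative only.
MUST_HAVE = {"soc2_type2", "rest_api"}

vendors = [
    {"name": "Vendor A", "capabilities": {"soc2_type2", "rest_api", "sso"}, "nice_score": 7},
    {"name": "Vendor B", "capabilities": {"rest_api", "sso"}, "nice_score": 9},  # no SOC 2 Type II
    {"name": "Vendor C", "capabilities": {"soc2_type2", "rest_api"}, "nice_score": 5},
    {"name": "Vendor D", "capabilities": {"soc2_type2", "rest_api", "on_prem"}, "nice_score": 8},
]


def shortlist(vendors, must_have, min_nice_score, max_size):
    # Gate 1 (pass/fail): missing any must-have eliminates the vendor outright.
    passed = [v for v in vendors if must_have <= v["capabilities"]]
    # Gate 2 (scoring): keep vendors that clear the nice-to-have bar, best first.
    qualified = [v for v in passed if v["nice_score"] >= min_nice_score]
    qualified.sort(key=lambda v: v["nice_score"], reverse=True)
    return [v["name"] for v in qualified[:max_size]]


print(shortlist(vendors, MUST_HAVE, min_nice_score=6, max_size=6))
# ['Vendor D', 'Vendor A']
```

Vendor B has the highest nice-to-have score but is eliminated at the first gate because it lacks SOC 2 Type II, mirroring the example above.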

Preparing a Request for Information (RFI)

A Request for Information (RFI) is a preliminary document sent to vendors on your longlist to gather general information about their capabilities, experience, and offerings. Unlike the more detailed RFP, the RFI is designed to help you learn about the vendor landscape and confirm whether vendors should proceed to the formal evaluation stage.

An effective RFI for AI vendor evaluation should include questions about the vendor’s company background and history, core AI capabilities and technology stack, relevant industry experience and case studies, general pricing model and licensing approach, support and service level options, and security and compliance posture.

The responses to your RFI will help you refine your shortlist and prepare a more targeted Request for Proposal. Many organizations skip the RFI and go directly to the RFP, but this can lead to wasted effort if vendors that are clearly not a fit end up receiving and responding to your detailed RFP.

Issuing a Request for Proposal (RFP)

The Request for Proposal is a formal document that invites shortlisted vendors to submit detailed proposals addressing your specific requirements. A well-crafted RFP for an AI Application or AI Platform evaluation should include a clear project overview and business context, detailed functional and technical requirements, expected deliverables and timelines, evaluation criteria and their weights, submission format and deadline, and questions requiring specific, measurable responses.

The quality of your RFP directly impacts the quality of vendor proposals you receive. Vague requirements produce vague responses. The more specific and structured your RFP, the easier it becomes to compare vendor proposals on an equal footing.

Statement: Organizations that invest time in crafting detailed, well-structured RFPs consistently report receiving higher quality vendor proposals and making more informed final decisions.

Reviewing Vendor Proposals

Once proposals are received, the evaluation team should review each one systematically against the predefined criteria. It is essential to resist the temptation to be swayed by flashy presentations or impressive marketing materials. Instead, focus on substance: does the vendor’s proposed solution actually address your requirements? Are their timelines realistic? Is their pricing transparent?

Create a standardized evaluation template that all reviewers use. This ensures consistency and makes it easier to aggregate scores later. Each team member should review proposals independently before coming together to discuss and reconcile their assessments. This approach reduces groupthink and ensures diverse perspectives are captured.

Pay special attention to areas where vendors provide vague or generic responses. A vendor that cannot provide specific details about how they will address your unique requirements may not have the depth of experience needed to deliver successfully.

Evaluating Technical Capabilities

Technical evaluation is the backbone of any AI vendor assessment. This step examines the vendor’s technology stack, AI model performance, system architecture, API capabilities, data handling practices, and integration flexibility. For AI Platforms, you should evaluate the breadth and depth of available algorithms and models, the quality and accessibility of the platform’s APIs, data preprocessing and feature engineering capabilities, model training, testing, and deployment workflows, real-time inference performance and latency, and customization options for your specific use cases.

A practical approach is to develop a set of technical test scenarios that reflect your real-world use cases. Ask vendors to demonstrate how their AI Application handles these specific scenarios rather than relying solely on generic product demos.

Thesis: The technical capability of an AI vendor should not be evaluated in isolation but always in the context of how well it integrates with your existing technology ecosystem and supports your specific business workflows.

Assessing Industry Experience and Expertise

An AI vendor with deep experience in your specific industry brings invaluable domain knowledge that accelerates implementation and reduces risk. They understand your regulatory environment, common data challenges, industry-specific workflows, and the nuances that generic AI vendors often miss.

When evaluating industry experience, look for relevant case studies and success stories, familiarity with your industry’s regulatory requirements, pre-built models or templates specific to your domain, references from organizations similar to yours, and published thought leadership or research in your industry.

For example, an AI Platform vendor that has extensive experience in healthcare will understand HIPAA requirements, clinical workflow integration, patient data sensitivity, and the regulatory approval process for AI-powered diagnostic tools. This kind of domain expertise can save months of implementation time and significantly reduce compliance risk.

Vendor Comparison: Key Parameters at a Glance

| Parameter | Vendor A (Enterprise) | Vendor B (Mid-Market) | Vendor C (Startup) |
|---|---|---|---|
| Pricing Model | Annual License | Monthly Subscription | Pay-per-Use |
| Scalability | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐ |
| AI Model Variety | 50+ Models | 20+ Models | 5 to 10 Models |
| Support Level | 24/7 Dedicated | Business Hours | Email Only |
| Compliance | SOC 2, HIPAA, GDPR | SOC 2, GDPR | GDPR |
| Integration APIs | Full REST + GraphQL | REST API | REST API (Limited) |
| Best For | Large Enterprises | Growing Businesses | Budget-Conscious Teams |

Comparing Pricing and Cost Structures

Pricing comparisons for AI Platforms can be deceptively complex. Vendors use different pricing models including per user licensing, usage based pricing, tiered subscriptions, flat annual fees, and hybrid models. To make an accurate comparison, you need to normalize pricing across vendors using a common framework.

Start by requesting detailed pricing breakdowns from each vendor that include initial setup and implementation fees, recurring subscription or license costs, data storage and processing charges, API call or usage based fees, training and onboarding costs, customization and integration fees, and ongoing maintenance and support costs.

Be wary of vendors who provide a single, all-inclusive price without breaking it down. This lack of transparency often masks hidden costs that surface later during implementation. Similarly, unusually low pricing can indicate that the vendor is cutting corners on features, support, or security.
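One way to normalize quotes built on different pricing models is to price every quote against the same assumed usage profile. The Python sketch below does this for a year-one estimate. The usage figures, prices, and the `Quote` and `first_year_cost` names are hypothetical, chosen loosely to mirror the annual-license, subscription (modeled here as per-seat), and pay-per-use models in the comparison table above.

```python
from dataclasses import dataclass

# Assumed usage profile shared by every quote; replace with your own forecasts.
USERS = 50
API_CALLS_PER_MONTH = 200_000


@dataclass
class Quote:
    vendor: str
    setup_fee: float = 0.0           # one-time implementation fee
    annual_license: float = 0.0      # flat annual fee
    per_user_per_month: float = 0.0  # per-seat subscription
    per_1k_calls: float = 0.0        # usage-based API pricing
    annual_support: float = 0.0      # support billed separately
    annual_training: float = 0.0     # training billed separately


def first_year_cost(q: Quote, users=USERS, calls_per_month=API_CALLS_PER_MONTH) -> float:
    """Estimated year-one cost of a quote under the shared usage profile."""
    seats = q.per_user_per_month * users * 12
    usage = q.per_1k_calls * (calls_per_month / 1000) * 12
    return q.setup_fee + q.annual_license + seats + usage + q.annual_support + q.annual_training


# Hypothetical quotes, one per pricing model.
quotes = [
    Quote("Vendor A", setup_fee=20_000, annual_license=90_000),
    Quote("Vendor B", per_user_per_month=120, annual_support=12_000),
    Quote("Vendor C", per_1k_calls=25, annual_training=5_000),
]

for q in sorted(quotes, key=first_year_cost):
    print(f"{q.vendor}: ${first_year_cost(q):,.0f}")
# Vendor C: $65,000
# Vendor B: $84,000
# Vendor A: $110,000
```

The ranking depends heavily on the usage assumptions: `first_year_cost(quotes[2], calls_per_month=400_000)` returns 125,000 for the pay-per-use quote, which would make it the most expensive of the three. Running the same normalization under low, expected, and high usage scenarios is a simple way to expose that sensitivity.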

Analyzing Total Cost of Ownership (TCO)

Total Cost of Ownership goes beyond the sticker price to capture every cost associated with a vendor relationship over its entire lifespan. For AI Applications, TCO includes direct costs (licensing, hardware, cloud infrastructure) as well as indirect costs (internal IT resources, productivity loss during transition, training time, opportunity costs).

A comprehensive TCO analysis for AI Platform selection should cover a 3-to-5-year horizon and include procurement costs (one-time), operational costs (recurring), personnel costs (internal team effort), risk costs (potential downtime, data breaches, vendor lock-in), and exit costs (migration, data export, contract termination).

Example: Consider two AI Platform vendors. Vendor X charges $100,000 annually, including all support, training, and updates. Vendor Y charges $60,000 annually for the license but bills separately for support ($15,000 per year), training ($10,000 per year), and major updates ($20,000 per year), bringing its effective annual cost to $105,000. Over three years, Vendor X costs $300,000 while Vendor Y costs $315,000. The apparently cheaper option is actually more expensive once the full picture is taken into account.
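The arithmetic in this example can be reproduced with a small helper. This Python sketch assumes, as the $315,000 figure implies, that Vendor Y’s support, training, and update charges recur every year; the `tco` function is a hypothetical illustration, and a fuller model would add the personnel, risk, and exit costs listed above.

```python
def tco(annual_costs, years, one_time_costs=0):
    """Total cost of ownership: one-time costs plus recurring annual costs over the horizon."""
    return one_time_costs + sum(annual_costs.values()) * years


# Annual cost lines from the example above.
vendor_x = {"license (incl. support, training, updates)": 100_000}
vendor_y = {"license": 60_000, "support": 15_000, "training": 10_000, "major updates": 20_000}

print(tco(vendor_x, years=3))  # 300000
print(tco(vendor_y, years=3))  # 315000
```

One-time items such as migration or contract-exit costs can be passed through `one_time_costs`; with a hypothetical $40,000 exit cost, `tco(vendor_y, years=5, one_time_costs=40_000)` returns 565000.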

Conducting Vendor Demos and Proof of Concept

Vendor demonstrations and proof of concept (POC) trials are where theory meets practice. While proposals and documentation tell you what a vendor claims to do, demos and POCs show you what they can actually deliver. This step is critical for AI Applications because the gap between marketing promises and real world performance can be substantial.

When conducting vendor demos, provide vendors with your specific use cases and data samples rather than allowing them to use scripted, prepared scenarios. Ask them to demonstrate real-time capabilities, integration processes, and customization options. Invite end users to participate in demos so you can assess usability and user experience firsthand.

For proof of concept trials, define clear success criteria upfront. A POC should test the vendor’s AI Platform against a specific, realistic scenario with measurable outcomes. Set a fixed timeline (typically 2 to 4 weeks) and evaluate results against your predefined benchmarks. The POC is your last line of defense against making a costly mistake, so invest the time and resources to do it properly.
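Success criteria defined upfront are easier to enforce when they are written down as explicit thresholds. The Python sketch below compares measured POC results against agreed pass/fail thresholds. The metric names, threshold values, and the `evaluate_poc` helper are hypothetical placeholders; a real POC would use the benchmarks from your own needs assessment.

```python
# Hypothetical POC success criteria, agreed before the trial starts.
# metric -> (threshold, direction); "min" means the measured value must be >= threshold.
CRITERIA = {
    "precision": (0.90, "min"),
    "recall": (0.85, "min"),
    "p95_latency_ms": (300, "max"),
    "integration_defects": (2, "max"),
}


def evaluate_poc(results, criteria=CRITERIA):
    """Compare measured POC results to the agreed thresholds."""
    report = {}
    for metric, (threshold, direction) in criteria.items():
        value = results.get(metric)
        if value is None:
            report[metric] = "MISSING"  # an unmeasured criterion counts as a failure
        elif direction == "min":
            report[metric] = "PASS" if value >= threshold else "FAIL"
        else:
            report[metric] = "PASS" if value <= threshold else "FAIL"
    passed = all(status == "PASS" for status in report.values())
    return passed, report


ok, report = evaluate_poc({"precision": 0.93, "recall": 0.81, "p95_latency_ms": 240})
print(ok)      # False
print(report)  # {'precision': 'PASS', 'recall': 'FAIL', 'p95_latency_ms': 'PASS', 'integration_defects': 'MISSING'}
```

Treating an unmeasured criterion as a failure is a deliberate choice: it prevents a POC from being declared successful simply because an awkward metric was never tested.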

Reviewing Security, Compliance, and Risk Factors

In an era of increasing data regulations and sophisticated cyber threats, security and compliance evaluation is non-negotiable. When selecting an AI Platform, your security assessment should cover data encryption (at rest and in transit), access control and authentication mechanisms, vulnerability management and patching practices, incident response protocols, third-party audit results and certifications, and data residency and sovereignty compliance.

For compliance, verify that the vendor meets all regulations and industry standards relevant to your sector and geography. These include GDPR for personal data of individuals in the EU, HIPAA for healthcare data, SOC 2 attestation for service organization controls, PCI DSS for payment card data, and any industry-specific regulations that apply to your business.

Risk assessment should also consider vendor financial stability (can they survive a market downturn?), concentration risk (are you becoming too dependent on a single vendor?), and business continuity planning (what happens if the vendor experiences a major outage or goes out of business?).

Checking References and Client Feedback

Reference checks provide real world validation that no amount of vendor documentation can replace. When checking references, go beyond the curated list provided by the vendor. Look for independent reviews on platforms like G2, Capterra, and TrustRadius. Reach out to your professional network for candid feedback from organizations that have worked with the vendor.

When speaking with references, ask targeted questions about the vendor’s communication quality and responsiveness, ability to meet deadlines and stay within budget, handling of unexpected challenges or scope changes, quality of post-deployment support, whether the client would choose the same vendor again, and specific results achieved with the AI Application.

A pattern of consistent positive feedback across multiple independent sources is a strong indicator of vendor reliability. Conversely, recurring themes in negative feedback (such as slow support or hidden costs) should be taken seriously regardless of how well the vendor scores on other criteria.

Scoring and Ranking Vendors

With all evaluation data collected, the next step is to compile and score each vendor using your weighted criteria model. This quantitative approach turns subjective impressions into explicit, comparable numbers. Each vendor receives a score for each criterion, which is multiplied by the criterion’s weight to produce a weighted score. The sum of a vendor’s weighted scores is its final total, and the totals determine the ranking.

Vendor Scoring Matrix Example

| Criteria | Weight | Vendor A Score | Vendor A Weighted | Vendor B Score | Vendor B Weighted |
|---|---|---|---|---|---|
| Technical Capabilities | 20% | 9/10 | 1.80 | 7/10 | 1.40 |
| Security & Compliance | 18% | 8/10 | 1.44 | 9/10 | 1.62 |
| Scalability | 15% | 8/10 | 1.20 | 8/10 | 1.20 |
| Industry Experience | 12% | 7/10 | 0.84 | 9/10 | 1.08 |
| Cost & TCO | 12% | 6/10 | 0.72 | 8/10 | 0.96 |
| Support & SLAs | 10% | 9/10 | 0.90 | 7/10 | 0.70 |
| Integration | 8% | 8/10 | 0.64 | 7/10 | 0.56 |
| Innovation Roadmap | 5% | 8/10 | 0.40 | 6/10 | 0.30 |
| TOTAL WEIGHTED SCORE | 100% | | 7.94 / 10 | | 7.82 / 10 |

As the scoring matrix above shows, the weights determine which individual differences matter most. In this example, Vendor A edges ahead (7.94 vs. 7.82) primarily because of stronger technical capabilities and support, even though Vendor B scores higher on security, industry experience, and cost. This is precisely why a structured, quantitative approach is superior to gut-feel decision-making: it makes the trade-offs visible. A margin this narrow is, however, sensitive to the weights themselves, so it is worth confirming the ranking against POC results and reference checks before treating it as final.
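The weighted totals in the matrix can be reproduced, and recomputed whenever a score or weight changes, with a few lines of Python. This is a minimal sketch using the weights and raw scores from the tables above; `weighted_total` is a hypothetical helper name.

```python
# Criterion weights from the sample table (they sum to 1.0).
WEIGHTS = {
    "Technical Capabilities": 0.20,
    "Security & Compliance": 0.18,
    "Scalability": 0.15,
    "Industry Experience": 0.12,
    "Cost & TCO": 0.12,
    "Support & SLAs": 0.10,
    "Integration": 0.08,
    "Innovation Roadmap": 0.05,
}

# Raw scores out of 10 from the scoring matrix, in the same order as WEIGHTS.
SCORES = {
    "Vendor A": [9, 8, 8, 7, 6, 9, 8, 8],
    "Vendor B": [7, 9, 8, 9, 8, 7, 7, 6],
}


def weighted_total(raw_scores, weights=WEIGHTS):
    """Sum of weight x score; stays on a 0-10 scale because the weights sum to 1."""
    if len(raw_scores) != len(weights):
        raise ValueError("need exactly one score per criterion")
    return sum(w * s for w, s in zip(weights.values(), raw_scores))


ranking = sorted(SCORES, key=lambda v: weighted_total(SCORES[v]), reverse=True)
for vendor in ranking:
    print(f"{vendor}: {weighted_total(SCORES[vendor]):.2f} / 10")
# Vendor A: 7.94 / 10
# Vendor B: 7.82 / 10
```

Rerunning with different weights is a quick sensitivity check. For example, shifting five points from Technical Capabilities to Cost & TCO (20% to 15% and 12% to 17%) gives Vendor A 7.79 and Vendor B 7.87, reversing the ranking.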

Negotiating Terms and Conditions

Negotiation is not just about getting a lower price. It is about structuring a partnership agreement that protects both parties and sets the foundation for long-term success. When negotiating with your selected AI vendor, key areas to address include service level agreements (SLAs) with specific, measurable targets, pricing structure with caps on annual increases, intellectual property ownership for custom models and solutions, data ownership and portability clauses, exit terms and transition support provisions, liability limitations and indemnification, performance penalties and remediation procedures, and contract renewal terms and conditions.

Approach negotiations with a collaborative mindset rather than an adversarial one. The best vendor relationships are partnerships where both parties are invested in mutual success. That said, never compromise on critical protections like data ownership, IP rights, and exit clauses. These provisions may seem unnecessary when the relationship is new, but they become invaluable if circumstances change.

Pro Tip: Always negotiate the exit before you need it. A clear exit strategy with defined data migration support, transition timelines, and cost provisions should be part of every vendor agreement. Organizations that skip this step often find themselves locked into underperforming vendor relationships with no practical way out.

Finalizing the Vendor Selection Decision

The final selection decision should be a culmination of all the data, analysis, and insights gathered throughout the process. At this point, you should have a clear picture of each vendor’s strengths and weaknesses, supported by quantitative scores and qualitative observations. Present a comprehensive vendor evaluation report to your decision-making committee that includes a summary of each vendor’s final weighted score, key differentiators and areas of concern for each vendor, TCO comparison over the planned engagement period, reference check findings, POC results and technical validation outcomes, risk assessment summary, and a clear recommendation with supporting rationale.

The decision should not be made by a single individual. Involve representatives from all affected departments to ensure buy-in and to catch any blind spots. Once the decision is made, document the rationale clearly. This documentation serves multiple purposes: it provides accountability, supports future vendor reviews, and helps defend the decision if it is questioned later.

After selecting the vendor, notify all participating vendors of your decision. Provide constructive feedback to those not selected, as they may be valuable partners for future projects. This professional courtesy also maintains your organization’s reputation in the vendor community.

Onboarding and Vendor Performance Management

The vendor selection process does not end with signing the contract. Effective onboarding and ongoing performance management are essential to realizing the full value of your AI investment. The onboarding phase should include a detailed implementation plan with clear milestones and responsibilities, knowledge transfer sessions between the vendor and your internal teams, system integration and data migration activities, user training programs tailored to different roles and skill levels, and establishment of communication protocols and escalation paths.

After onboarding, implement a vendor performance management framework that tracks key performance indicators (KPIs) aligned with your original success criteria. Schedule regular performance review meetings (monthly for the first quarter, then quarterly thereafter) to assess progress, address issues, and identify opportunities for optimization.

Common KPIs for AI vendor performance monitoring include system uptime and availability, model accuracy and prediction quality, response time and processing speed, support ticket resolution time, SLA compliance rate, user satisfaction scores, and return on investment metrics.
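Two of these KPIs, uptime and SLA compliance rate, reduce to simple arithmetic. The Python sketch below computes both for one 30-day period. The downtime figure, ticket resolution times, the 99.9% uptime target, the 8-hour resolution SLA, and the helper names are all hypothetical; substitute the targets written into your own contract.

```python
MINUTES_IN_30_DAYS = 30 * 24 * 60  # 43,200


def uptime_pct(downtime_minutes, period_minutes=MINUTES_IN_30_DAYS):
    """Percentage of the period during which the service was available."""
    return 100 * (period_minutes - downtime_minutes) / period_minutes


def sla_compliance_rate(resolution_hours, target_hours):
    """Share of support tickets resolved within the SLA target, in percent."""
    if not resolution_hours:
        return 100.0  # design choice: no tickets means nothing was breached
    met = sum(1 for h in resolution_hours if h <= target_hours)
    return 100 * met / len(resolution_hours)


# Hypothetical figures for one month.
uptime = uptime_pct(downtime_minutes=52)
tickets = [2, 5, 7.5, 9, 3, 12, 4, 6]  # hours taken to resolve each ticket
compliance = sla_compliance_rate(tickets, target_hours=8)

print(f"Uptime: {uptime:.3f}% (target 99.9%: {'met' if uptime >= 99.9 else 'missed'})")
print(f"SLA compliance: {compliance:.1f}%")
# Uptime: 99.880% (target 99.9%: missed)
# SLA compliance: 75.0%
```

Note how tight a 99.9% target is: over 30 days it allows only 43.2 minutes of downtime, so the 52 minutes in this example is already a breach.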

Remember that vendor management is an ongoing relationship, not a one-time transaction. The most successful AI implementations come from organizations that treat their vendors as strategic partners and invest in nurturing these relationships over time.

Why Nadcab Labs Is Your Trusted Partner for AI Vendor Selection

With over 8 years of hands-on experience in AI Application consulting, platform evaluation, and technology strategy, Nadcab Labs has established itself as a leading authority in helping businesses navigate the complex AI vendor landscape. Our team has guided hundreds of organizations, from ambitious startups to Fortune 500 enterprises, through structured vendor selection processes that deliver measurable results. We bring deep expertise across AI Platforms, machine learning frameworks, natural language processing solutions, and intelligent automation systems.

Our consultants do not simply recommend vendors. They work alongside your team to understand your unique business context, define precise evaluation criteria, conduct rigorous technical assessments, and negotiate terms that protect your interests. The Nadcab Labs approach combines industry-proven methodologies with real-world pragmatism, ensuring that your AI vendor selection is not just thorough but also efficient and aligned with your strategic vision.

When you partner with Nadcab Labs, you gain access to proprietary evaluation frameworks, vendor intelligence databases, and a network of technology partnerships built over nearly a decade in the field. Our track record speaks for itself: 95% of our clients report satisfaction with their final vendor selection, and our structured approach has saved organizations an average of 30% on total AI investment costs. Whether you are selecting your first AI Application or re-evaluating your existing vendor portfolio, Nadcab Labs has the expertise, experience, and commitment to help you make the smartest possible decision.

Frequently Asked Questions

The timeline varies depending on complexity, but most organizations complete the AI vendor selection process in 8 to 16 weeks. This includes needs assessment, RFI/RFP cycles, demos, evaluations, and contract negotiations. Enterprises with stricter compliance requirements may take longer due to additional security audits and legal reviews before finalizing any partnership.

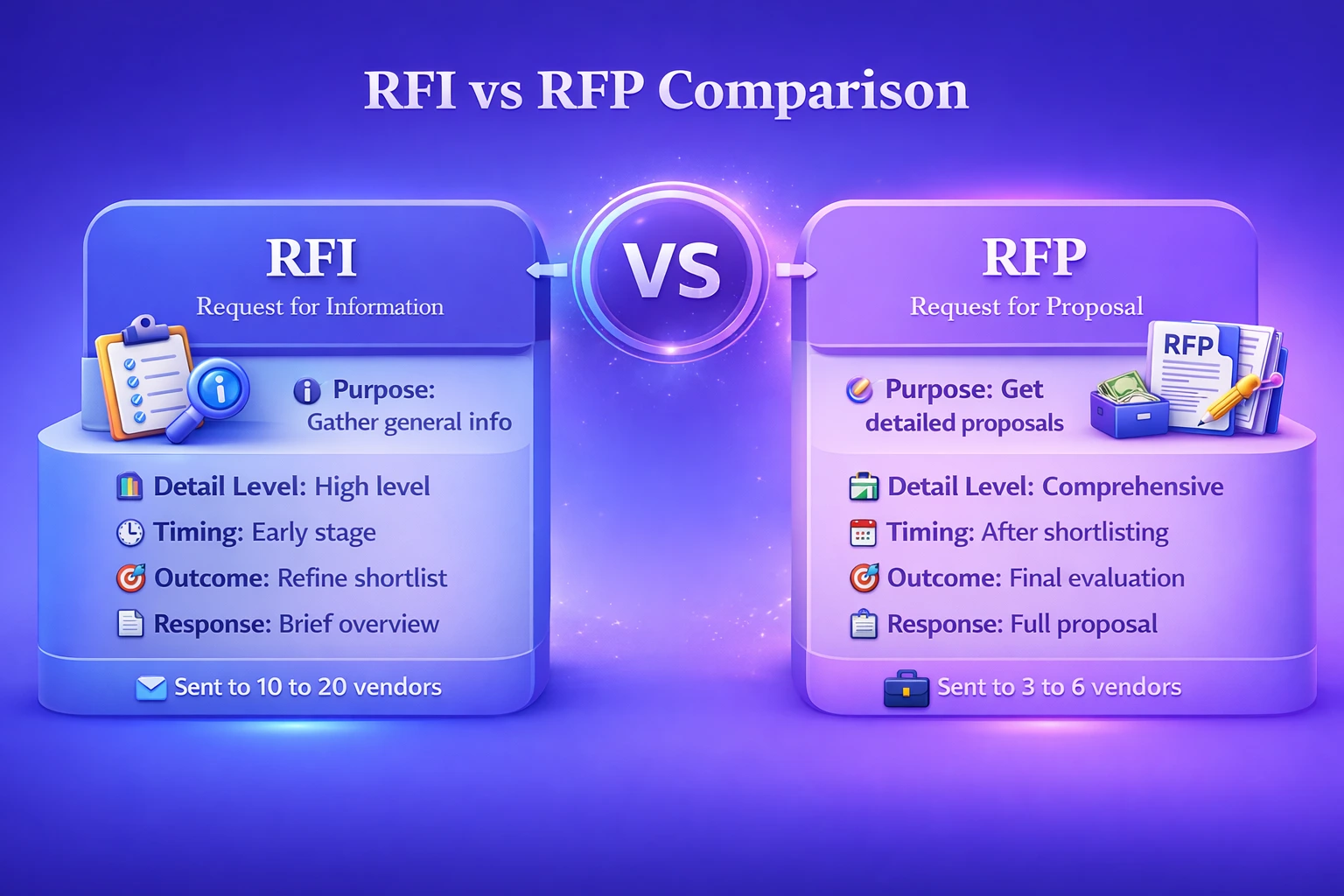

An RFI (Request for Information) is a preliminary document used to gather general information about vendor capabilities. An RFP (Request for Proposal) is more detailed and asks vendors to submit a formal solution proposal with pricing, timelines, and deliverables. RFIs help in shortlisting while RFPs help in making the final selection based on concrete deliverables.

Absolutely. Small businesses can avoid costly mistakes and wasted resources by following a structured vendor selection approach. Even a simplified framework with clear criteria, budget limits, and a comparison checklist can help smaller organizations choose the right AI platform without overspending or committing to vendors that do not align with their growth trajectory.

Data privacy is a critical factor, especially when AI platforms handle sensitive customer or business information. Organizations must ensure that vendors comply with regulations such as GDPR, HIPAA, or regional data protection laws. Failure to verify this can lead to legal penalties, reputational damage, and loss of customer trust in the long term.

Vendor performance is typically measured through Key Performance Indicators (KPIs) such as uptime, response time, resolution rate, and deliverable quality. Regular reviews, SLA compliance tracking, and periodic audits help organizations ensure the vendor consistently meets contractual obligations and supports business goals effectively over time.

Not necessarily. The lowest-cost vendor may lack critical features, scalability, or adequate support. Organizations should evaluate Total Cost of Ownership (TCO), which includes implementation fees, training, maintenance, and hidden costs. A slightly more expensive vendor offering better reliability and long-term value often proves to be the smarter investment overall.

Key red flags include scripted demos that avoid real-time scenarios, reluctance to answer technical questions, vague timelines, and inability to provide relevant case studies. If a vendor avoids discussing integration challenges, data migration limitations, or post-deployment support, it may signal gaps in their AI application delivery capabilities.

Scalability is essential, especially for growing organizations. An AI platform that works well for current needs but cannot handle increased data volumes, user loads, or feature expansion will become a bottleneck. Evaluating the vendor’s infrastructure, cloud compatibility, and ability to scale without major re-architecting is vital during the selection process.

Yes, involving stakeholders from IT, finance, operations, legal, and end-user teams ensures a well-rounded evaluation. Each department brings a different perspective, from technical feasibility to budget alignment to compliance requirements. Cross-functional collaboration reduces the risk of selecting a vendor that meets only one team’s needs while failing others.

If a vendor underperforms, the organization should first refer to the SLA and contractual terms to address specific breaches. Most agreements include remediation clauses, penalty provisions, or exit options. If issues persist, businesses may escalate to formal dispute resolution or begin a transition plan to onboard an alternative vendor with minimal disruption.

Reviewed & Edited By

Aman Vaths

Founder of Nadcab Labs

Aman Vaths is the Founder & CTO of Nadcab Labs, a global digital engineering company delivering enterprise-grade solutions across AI, Web3, Blockchain, Big Data, Cloud, Cybersecurity, and Modern Application Development. With deep technical leadership and product innovation experience, Aman has positioned Nadcab Labs as one of the most advanced engineering companies driving the next era of intelligent, secure, and scalable software systems. Under his leadership, Nadcab Labs has built 2,000+ global projects across sectors including fintech, banking, healthcare, real estate, logistics, gaming, manufacturing, and next-generation DePIN networks. Aman’s strength lies in architecting high-performance systems, end-to-end platform engineering, and designing enterprise solutions that operate at global scale.