Key Takeaways

- Oracle aggregator contracts collect and validate data from multiple sources, removing the single point of failure that a lone data provider creates for a decentralized application.

- Multi-source data validation through aggregation significantly reduces the manipulation risks that have caused substantial losses across DeFi protocols.

- Consensus mechanisms including median calculation, weighted averaging, and outlier detection ensure accurate data delivery to consuming smart contracts.

- Oracle aggregators protect against flash loan attacks, price manipulation, and data feed compromises threatening DeFi protocol security.

- Cross-chain oracle aggregation enables consistent data delivery across multiple blockchain networks for interoperable decentralized applications.

- Gas optimization techniques including off-chain computation and batched updates make oracle aggregators economically viable for production protocols.

- Real-world applications span DeFi lending, derivatives pricing, insurance protocols, and supply chain verification across the USA, UK, UAE, and Canada.

- Proper aggregator design requires balancing data freshness, gas costs, security guarantees, and decentralization requirements for specific use cases.

- Transparency and auditability of aggregation logic build trust among users and enable verification of data integrity processes.

- Future oracle aggregators will incorporate AI-driven validation, zero-knowledge proofs, and enhanced cross-chain interoperability capabilities.

Understanding Oracle Aggregator Contracts in Decentralized Systems

Oracle aggregator contracts represent a critical infrastructure layer that bridges the gap between external real-world data and blockchain-based smart contract applications. These specialized contracts collect information from multiple independent oracle sources, validate the data through consensus mechanisms, and deliver a single trusted output to consuming protocols. The aggregation process fundamentally transforms how decentralized applications access and trust external information.

Our agency has spent over eight years implementing oracle solutions for clients across the USA, UK, UAE, and Canada. This experience has demonstrated that oracle aggregator contracts are essential for any serious DeFi protocol, prediction market, or data-dependent decentralized application. The difference between secure protocols and those vulnerable to exploitation often lies in their oracle architecture choices.

Understanding oracle aggregator contracts requires recognizing that blockchains cannot natively access external data. Every piece of information, from price feeds to weather data, must be brought on-chain through oracles. Aggregators ensure this data transfer happens securely, accurately, and with appropriate redundancy to maintain system integrity even when individual data sources fail or become compromised.

The Importance of Data Aggregation in Trustless Smart Contracts

Data aggregation forms the foundation of trustless smart contract operations that depend on external information. Without aggregation, smart contracts must either trust a single data provider or implement complex verification logic independently. Aggregator contracts consolidate this verification burden, providing a standardized, audited solution that multiple protocols can leverage.

The trustless nature of aggregation comes from transparent, verifiable consensus mechanisms. Anyone can audit how data sources are weighted, how outliers are handled, and how final values are calculated. This transparency enables users to verify that the aggregation process itself does not introduce manipulation opportunities or hidden centralization risks.

Financial applications particularly benefit from robust aggregation. Lending protocols use aggregated price feeds to calculate collateralization ratios. Derivatives platforms depend on accurate settlement prices. Insurance protocols require reliable event verification. Each application demands data accuracy that only proper aggregation can provide consistently.

Decentralized Oracles vs Centralized Data Providers

Understanding the fundamental differences between decentralized oracle networks and centralized data providers helps teams select appropriate solutions for their specific requirements and risk tolerances.

| Feature | Decentralized Oracles | Centralized Providers |
|---|---|---|
| Trust Model | Distributed consensus | Single entity trust |
| Censorship Resistance | High | Low |
| Latency | Higher | Lower |
| Cost | Higher gas fees | Lower operational cost |
| Security Guarantees | Cryptoeconomic | Contractual/Legal |

Multi-Source Data Validation Through Oracle Aggregation

Effective multi-source validation requires carefully designed processes that balance accuracy, speed, and cost considerations.

Source Selection

- Independent data providers

- Geographic distribution

- Different data methodologies

- Reputation verification

Validation Process

- Timestamp verification

- Range boundary checks

- Historical deviation analysis

- Cross-source comparison

Output Delivery

- Consensus value calculation

- Confidence scoring

- Update frequency control

- Consumer notification
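
The validation steps listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `Report` type, the `validate_reports` function, and every threshold (`max_age_s`, `lo`, `hi`, `max_dev`) are hypothetical names and values chosen for the example, and historical deviation analysis is left out for brevity.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Report:
    source: str      # identifier of the reporting oracle (hypothetical)
    price: float     # reported value
    timestamp: int   # Unix seconds at which the value was observed


def validate_reports(reports, now, max_age_s=60, lo=0.0, hi=1e9, max_dev=0.05):
    """Return the reports that pass timestamp, range, and cross-source checks."""
    # 1. Timestamp verification: drop stale or future-dated reports.
    fresh = [r for r in reports if 0 <= now - r.timestamp <= max_age_s]
    # 2. Range boundary checks: drop values outside the configured bounds.
    in_range = [r for r in fresh if lo < r.price < hi]
    if not in_range:
        return []
    # 3. Cross-source comparison: drop values too far from the median.
    mid = median(r.price for r in in_range)
    return [r for r in in_range if abs(r.price - mid) <= max_dev * mid]


reports = [
    Report("a", 100.0, 1_000),
    Report("b", 101.0, 1_010),
    Report("c", 150.0, 1_005),  # far from the other sources
    Report("d", 100.5, 900),    # older than max_age_s
]
accepted = validate_reports(reports, now=1_020)
print([r.source for r in accepted])  # ['a', 'b']
```

Only the surviving reports would then feed the consensus calculation. Ordering the cheap checks (age, range) before the cross-source comparison keeps obviously bad values from skewing the median.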

How Oracle Aggregators Minimize Data Manipulation Risks

Oracle aggregators employ sophisticated mechanisms to detect and prevent data manipulation attempts that could exploit dependent protocols. These defenses operate at multiple layers, from individual source validation to aggregate output verification. The goal is making manipulation economically infeasible by requiring attackers to compromise multiple independent systems simultaneously.

Time-weighted average prices (TWAP) represent one powerful anti-manipulation technique. Rather than using instantaneous spot prices, aggregators calculate averages over configurable time windows. This approach neutralizes flash loan attacks that temporarily manipulate prices within single blocks, as the attack cost increases proportionally with the required manipulation duration.
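
To make the effect concrete, here is a small Python sketch of a time-weighted average. The `twap` function and the sample numbers are illustrative assumptions: a price of 100 holds for most of a 600-second window, and a manipulated price of 200 holds for the final 12 seconds.

```python
def twap(observations, window_start, window_end):
    """Time-weighted average price over [window_start, window_end].

    `observations` is a list of (timestamp, price) pairs sorted by timestamp.
    Each price is assumed to stay in force until the next observation.
    """
    if window_end <= window_start:
        raise ValueError("window must have positive length")
    if not observations or observations[0][0] > window_start:
        raise ValueError("need an observation at or before window_start")
    total = 0.0
    for i, (ts, price) in enumerate(observations):
        next_ts = observations[i + 1][0] if i + 1 < len(observations) else window_end
        # Clip the interval during which this price applied to the window.
        start = max(ts, window_start)
        end = min(next_ts, window_end)
        if end > start:
            total += price * (end - start)
    return total / (window_end - window_start)


observations = [(0, 100.0), (588, 200.0)]  # spike lasts the final 12 seconds
print(twap(observations, 0, 600))          # 102.0
```

The spot price doubles, but the 10-minute average moves by only 2%. To move the average further, the attacker has to hold the manipulated price for longer, which is where the cost grows.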

Statistical outlier detection identifies anomalous data points that deviate significantly from consensus. When one source reports prices dramatically different from others, aggregators can flag, weight down, or exclude that data entirely. This mechanism protects against both compromised oracles and data feed errors that might otherwise propagate incorrect values to consuming smart contracts.

Consensus Models Used by Oracle Aggregator Contracts

Different consensus models offer varying trade-offs between simplicity, manipulation resistance, and computational efficiency. Selecting the appropriate model depends on specific use case requirements and threat models.[1]

| Consensus Model | Mechanism | Best For |
|---|---|---|
| Median | Middle value from sorted inputs | Price feeds, outlier resistance |
| Weighted Average | Reputation-based weighting | Trusted source prioritization |
| Threshold Signatures | M-of-N cryptographic agreement | High-security applications |
| TWAP | Time-weighted price averaging | Flash loan resistance |
| Trimmed Mean | Average after removing extremes | Volatile data smoothing |
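
Three of the models in the table are simple enough to compare directly. The Python sketch below uses the standard library `statistics.median` plus two hypothetical helpers, `weighted_average` and `trimmed_mean`, on a made-up set of five reports in which one source is badly wrong.

```python
from statistics import median


def weighted_average(values, weights):
    """Average of `values` weighted per source (for example by reputation)."""
    if len(values) != len(weights) or sum(weights) <= 0:
        raise ValueError("need one weight per value and a positive total weight")
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def trimmed_mean(values, trim=1):
    """Mean after dropping the `trim` lowest and `trim` highest values."""
    if len(values) <= 2 * trim:
        raise ValueError("not enough values to trim")
    kept = sorted(values)[trim:len(values) - trim]
    return sum(kept) / len(kept)


prices = [99.0, 100.0, 101.0, 102.0, 500.0]  # last source is faulty or malicious

print(median(prices))                             # 101.0
print(trimmed_mean(prices))                       # 101.0
print(weighted_average(prices, [1, 1, 1, 1, 1]))  # 180.4
```

With equal weights, a single bad source drags the average far from the market price, while the median and trimmed mean ignore it. A weighted average only resists the bad value if that source already carries a low weight.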

Handling Data Outliers and Inconsistencies in Aggregated Feeds

Outlier handling represents one of the most critical functions within oracle aggregator contracts. Data inconsistencies can arise from various sources including network delays, exchange-specific price variations, calculation errors, or deliberate manipulation attempts. Effective aggregators must distinguish between legitimate market variations and problematic data points requiring exclusion.

Statistical methods including standard deviation analysis and interquartile range calculations help identify outliers objectively. Aggregators can configure acceptable deviation thresholds based on historical volatility patterns for specific data types. Price feeds for stable assets might use tighter bounds than those for volatile cryptocurrencies or emerging market instruments.
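
As an illustration of the interquartile range approach, the following Python sketch applies Tukey-style fences using `statistics.quantiles` from the standard library (Python 3.8+). The `iqr_filter` helper and the multiplier `k` are assumptions for the example; a feed for a stable asset might use a smaller `k` than a feed for a volatile one.

```python
from statistics import quantiles


def iqr_filter(values, k=1.5):
    """Split values into (kept, outliers) using interquartile range fences."""
    q1, _, q3 = quantiles(values, n=4)  # first quartile, median, third quartile
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    kept = [v for v in values if low <= v <= high]
    outliers = [v for v in values if v < low or v > high]
    return kept, outliers


kept, outliers = iqr_filter([99.0, 100.0, 100.5, 101.0, 102.0, 140.0])
print(outliers)  # [140.0]
```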

When outliers are detected, aggregators must decide between exclusion and weighted reduction. Complete exclusion provides cleaner outputs but risks losing legitimate data during genuine market dislocations. Weighted approaches maintain broader data inclusion while reducing outlier influence. The optimal strategy depends on the consuming application’s requirements and risk tolerance.
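
The weighted alternative can be sketched as follows. This is one possible scheme among many, and it is an assumption of this example rather than a standard: sources within a `tolerance` band around the median keep full weight, and sources outside it are down-weighted in proportion to how far they deviate. The function name `soft_weighted_price` is hypothetical, and prices are assumed to be positive.

```python
from statistics import median


def soft_weighted_price(values, tolerance=0.02):
    """Weighted mean that shrinks, rather than removes, the influence of outliers."""
    mid = median(values)
    weights = []
    for v in values:
        rel_dev = abs(v - mid) / mid
        # Full weight inside the tolerance band, decaying weight outside it.
        weights.append(1.0 if rel_dev <= tolerance else tolerance / rel_dev)
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


values = [100.0, 101.0, 99.0, 130.0]
plain_mean = sum(values) / len(values)
print(plain_mean)                   # 107.5
print(soft_weighted_price(values))  # close to 100.7
```

The deviating report still contributes, so a genuine market move seen by only one venue is not discarded outright, but its pull on the output is small.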

Improving Smart Contract Execution Accuracy with Aggregated Data

Smart contract execution accuracy directly depends on input data quality. Aggregated data provides more reliable inputs than single-source alternatives, enabling smart contracts to make better decisions across all dependent operations. This accuracy improvement cascades through entire protocol ecosystems, benefiting all users who interact with data-dependent functions.

Lending protocols demonstrate aggregation benefits clearly. Accurate collateral valuations prevent both unnecessary liquidations that harm borrowers and under-collateralized positions that create systemic risks. The precision provided by aggregated price feeds enables protocols to offer more competitive loan-to-value ratios while maintaining appropriate safety margins.
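
A simplified calculation shows why the price input matters. The `health_factor` function, the 80% liquidation threshold, and the sample position below are hypothetical and do not describe any specific protocol; in this model a value below 1 makes the position eligible for liquidation.

```python
def health_factor(collateral_amount, collateral_price, debt_value,
                  liquidation_threshold=0.8):
    """Risk-adjusted collateral value divided by debt (simplified model)."""
    if debt_value <= 0:
        return float("inf")  # nothing borrowed, nothing to liquidate
    return collateral_amount * collateral_price * liquidation_threshold / debt_value


# Position: 10 units of collateral backing 15,000 of debt.
aggregated_price = 2000.0     # consensus of several sources
single_source_price = 1700.0  # one lagging or manipulated source

print(health_factor(10, aggregated_price, 15_000) > 1)     # True: position is safe
print(health_factor(10, single_source_price, 15_000) > 1)  # False: would be liquidated
```

The same position is healthy under the aggregated price and liquidatable under the single bad reading, which is the unnecessary liquidation scenario described above.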

Derivatives and options protocols particularly benefit from execution accuracy. Settlement prices must reflect true market conditions to ensure fair outcomes for all participants. Aggregated data provides the confidence necessary for these high-stakes applications where small pricing errors can result in significant financial consequences for traders.

Role of Oracle Aggregators in Mitigating Oracle-Based Attacks

Oracle-based attacks have caused substantial losses across DeFi protocols, making aggregator-based mitigation essential for production deployments. These attacks exploit weaknesses in how protocols obtain and trust external data, manipulating prices to extract value through exploited liquidations, arbitrage opportunities, or direct theft mechanisms.

Flash loan attacks represent the most common oracle exploitation vector. Attackers borrow large amounts without collateral, manipulate spot prices on low-liquidity exchanges, trigger profitable contract interactions based on the manipulated prices, and repay the loans, all within a single transaction. Aggregators counter these attacks through time-weighted pricing and multi-source validation, which are far harder to manipulate within a single block.

Front-running attacks exploit knowledge of pending oracle updates to position trades profitably. Aggregators mitigate this through unpredictable update timing, commit-reveal schemes, and threshold signature requirements that prevent any single party from knowing final values before publication.
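
The commit-reveal idea can be shown with a short Python sketch. It is a minimal illustration: `commit` and `verify_reveal` are hypothetical names, SHA-256 stands in for whatever hash an actual contract would use (on Ethereum that would typically be keccak256), and values are assumed to be non-negative integers that fit in 32 bytes.

```python
import hashlib
import secrets


def commit(value: int, salt: bytes) -> bytes:
    """Phase 1: publish a hash that hides the value until the reveal."""
    return hashlib.sha256(salt + value.to_bytes(32, "big")).digest()


def verify_reveal(commitment: bytes, value: int, salt: bytes) -> bool:
    """Phase 2: accept the revealed value only if it matches the commitment."""
    return secrets.compare_digest(commitment, commit(value, salt))


salt = secrets.token_bytes(32)  # random salt prevents guessing the value by brute force
commitment = commit(201_550, salt)

print(verify_reveal(commitment, 201_550, salt))  # True
print(verify_reveal(commitment, 201_551, salt))  # False
```

Because every oracle commits before any value is revealed, no participant can copy or react to another's report, and observers cannot learn the final value from the pending commitments.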

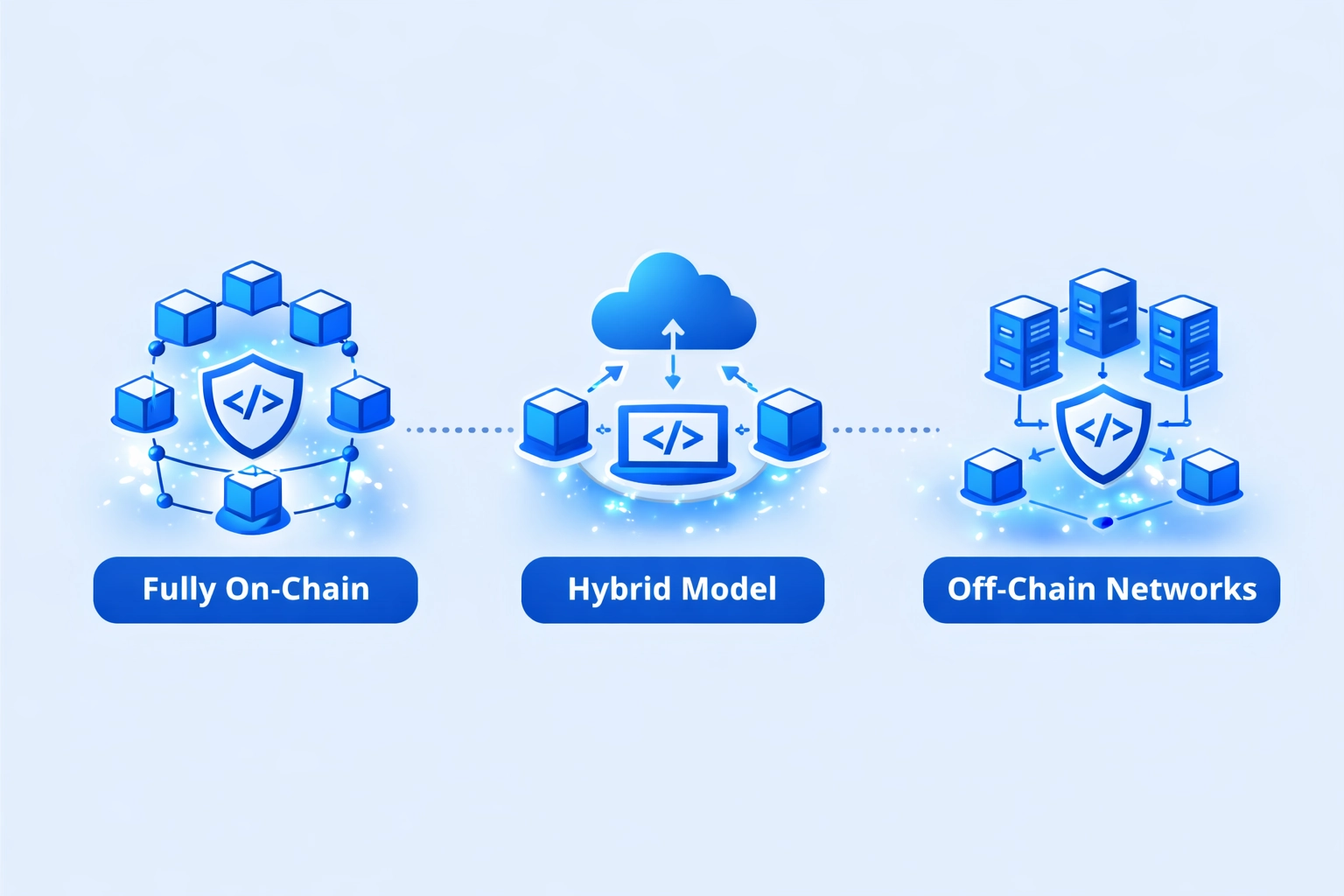

On-Chain and Off-Chain Oracle Aggregation Architectures

Selecting between on-chain and off-chain aggregation architectures requires understanding the trade-offs each approach presents.

Fully On-Chain

All aggregation logic executes on-chain. This offers maximum transparency and verifiability, at the price of higher gas costs and latency constraints.

Hybrid Model

Off-chain computation with on-chain verification. Balances efficiency with security through cryptographic proofs of correct aggregation.

Off-Chain Networks

Dedicated oracle networks handle aggregation entirely off-chain. Lowest costs but requires trust in network operators.
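
One common building block for the hybrid model is a Merkle tree: many values are hashed together off-chain, only the root is stored on-chain, and a consumer later proves that a single value belongs to that root. The Python sketch below is a simplified illustration with hypothetical function names and made-up feed values. It uses SHA-256 and sorted pair hashing, and it omits hardening that a real deployment would need, such as separating leaf hashes from internal node hashes.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _pair(a: bytes, b: bytes) -> bytes:
    # Sort the pair so a proof needs no left/right position flags.
    return _h(min(a, b) + max(a, b))


def _next_level(level):
    return [_pair(level[i], level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves):
    """Root hash for a non-empty list of byte-string leaves."""
    level = [_h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd-sized levels
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves, index):
    """Sibling hashes needed to rebuild the root from leaves[index]."""
    level = [_h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        proof.append(level[index ^ 1])  # sibling of the current node
        level = _next_level(level)
        index //= 2
    return proof


def verify(leaf, proof, root):
    """The check a consumer would run against the stored root."""
    node = _h(leaf)
    for sibling in proof:
        node = _pair(node, sibling)
    return node == root


feeds = [b"ETH/USD:2015", b"BTC/USD:43000", b"LINK/USD:14"]
root = merkle_root(feeds)
proof = merkle_proof(feeds, 2)
print(verify(b"LINK/USD:14", proof, root))  # True
print(verify(b"LINK/USD:99", proof, root))  # False
```

Only the 32-byte root has to be written on-chain per update, regardless of how many values the batch contains. Each proof grows with the logarithm of the batch size.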

Gas Efficiency and Performance Considerations in Oracle Aggregators

Gas optimization significantly impacts oracle aggregator viability for production deployments. Poorly optimized aggregators can make dependent protocols economically uncompetitive, driving users toward less secure alternatives with lower costs.

| Optimization | Gas Savings | Trade-offs |
|---|---|---|
| Batched Updates | 40-60% | Increased latency |
| Packed Storage | 30-50% | Code complexity |
| Off-Chain Computation | 60-80% | Trust assumptions |
| Delta Updates | 20-40% | State management |
| Merkle Proofs | 50-70% | Verification overhead |
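
Packed storage is the easiest of these to illustrate. On EVM chains a storage slot is 256 bits wide, so a price and its timestamp can share one slot instead of occupying two. The Python sketch below mimics the bit layout; the 192/64-bit split and the function names are assumptions made for the example, not a layout taken from any particular aggregator.

```python
PRICE_BITS = 192  # assumed width for a fixed-point price
TS_BITS = 64      # assumed width for a Unix timestamp


def pack(price: int, timestamp: int) -> int:
    """Combine price and timestamp into one 256-bit word."""
    if not 0 <= price < (1 << PRICE_BITS):
        raise ValueError("price does not fit in 192 bits")
    if not 0 <= timestamp < (1 << TS_BITS):
        raise ValueError("timestamp does not fit in 64 bits")
    return (price << TS_BITS) | timestamp


def unpack(word: int):
    """Recover (price, timestamp) from a packed word."""
    return word >> TS_BITS, word & ((1 << TS_BITS) - 1)


word = pack(2015_00000000, 1_700_000_000)  # price with 8 implied decimals
print(word < (1 << 256))                   # True: fits in a single slot
print(unpack(word))                        # (201500000000, 1700000000)
```

The trade-off listed in the table shows up in the range checks and bit masks: every read and write has to agree on the same layout.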

Real-World Use Cases of Oracle Aggregator Contracts

DeFi Lending Protocols

Aggregated price feeds enable accurate collateral valuations for lending platforms serving users across global markets.

Derivatives Trading

Options and futures platforms require precise settlement prices that aggregators provide through consensus mechanisms.

Insurance Protocols

Parametric insurance products use aggregated weather, flight, and event data to trigger automatic claim payouts.

Stablecoin Systems

Algorithmic stablecoins depend on accurate price feeds to maintain pegs through collateral rebalancing operations.

Prediction Markets

Event outcome verification requires trusted data sources that aggregators provide for fair market resolution.

Supply Chain Tracking

IoT sensor data aggregation enables verifiable supply chain transparency for enterprise blockchain applications.

Gaming and NFTs

Random number generation and external game state verification power blockchain gaming experiences.

Real Estate Tokenization

Property valuation feeds support tokenized real estate platforms operating in Dubai, London, and Toronto markets.

Oracle Aggregators in Cross-Chain and Multi-Network Systems

Cross-chain oracle aggregation addresses the challenge of maintaining data consistency across multiple blockchain networks. As DeFi expands to Layer 2 solutions and alternative chains, applications increasingly require identical data availability regardless of which network users interact with. Aggregators designed for cross-chain operation ensure this consistency.

Bridge protocols particularly depend on cross-chain oracles for secure asset transfers. Verifying source chain state on destination chains requires trusted data that aggregators provide. The security of billions in bridged assets depends on oracle reliability across network boundaries.

Multi-network deployment introduces latency and finality considerations that single-chain aggregators avoid. Different chains have varying block times and confirmation requirements. Cross-chain aggregators must account for these differences while maintaining data freshness standards that consuming applications expect.

Ensuring Transparency and Data Integrity with Oracle Aggregation

Transparency distinguishes decentralized oracle aggregators from traditional data providers. Every data source, weighting algorithm, and consensus calculation can be verified on-chain or through published specifications. This openness enables users to understand exactly how their applications receive external data and assess associated risks independently.

Data integrity verification extends beyond simple accuracy checks. Aggregators must prove that reported values accurately represent source inputs without manipulation during aggregation. Cryptographic proofs, multi-signature requirements, and audit trails provide this verification layer that traditional data providers cannot match.

Historical data availability supports protocol governance and dispute resolution. When questions arise about past oracle values, aggregators with comprehensive archives enable verification of historical accuracy. This accountability mechanism builds long-term trust that supports protocol growth across institutional markets.

Best Practices for Designing Oracle Aggregator Contracts

Follow these essential guidelines to create robust oracle aggregator implementations.

Source Diversity

- Multiple independent providers

- Geographic distribution

- Different methodologies

Security Measures

- Outlier detection enabled

- Time-weighted calculations

- Deviation thresholds set

Operational Excellence

- Regular monitoring active

- Incident response planned

- Update mechanisms tested

Documentation

- Source specifications published

- Consensus logic documented

- Security audits completed

Limitations and Challenges of Oracle Aggregator Models

Despite significant advantages, oracle aggregators face inherent limitations that teams must understand when selecting data infrastructure. Latency increases compared to single-source alternatives because aggregation requires collecting and processing data from multiple providers. For applications requiring sub-second price updates, this latency can create competitive disadvantages.

Cost considerations extend beyond gas fees to include oracle network subscription costs, infrastructure maintenance, and monitoring requirements. Small protocols may find comprehensive aggregation economically challenging, creating pressure to compromise on data quality through fewer sources or less frequent updates.

Complexity in aggregator design can introduce bugs that single-source solutions avoid. Each additional component creates potential failure points. Teams must balance security benefits against implementation risks, ensuring that aggregation logic itself does not become a vulnerability through coding errors or design flaws.

The Future of Oracle Aggregator Contracts in Decentralized Ecosystems

Emerging technologies and evolving requirements are shaping next-generation oracle aggregator capabilities.

Trend 1: AI-powered validation will use machine learning analysis to detect manipulation patterns that statistical methods miss.

Trend 2: Zero-knowledge proofs will enable private aggregation where sources remain confidential while outputs are verifiable.

Trend 3: Native cross-chain protocols will eliminate bridge dependencies for multi-network data consistency.

Trend 4: Modular aggregator architectures will allow protocols to customize consensus mechanisms for specific use cases.

Trend 5: Real-world asset integration will expand aggregator data types beyond crypto to traditional financial instruments.

Trend 6: Decentralized identity integration will enhance source reputation tracking through verifiable credential systems.

Trend 7: Automated market making for data will create competitive oracle markets with price discovery mechanisms.

Trend 8: Regulatory compliance features will support institutional adoption requirements across the USA, UK, UAE, and Canada.

Frequently Asked Questions

What are oracle aggregator contracts?

Oracle aggregator contracts collect data from multiple oracle sources and combine it into a single reliable output for smart contracts. They eliminate single points of failure by validating information across various providers. This multi-source approach significantly enhances data accuracy and trustworthiness for decentralized applications operating across global blockchain networks.

How do oracle aggregators improve security?

Oracle aggregators enhance security by cross-referencing data from multiple independent sources before delivering it on-chain. This validation process detects and filters manipulated or erroneous data points. By requiring consensus among multiple oracles, aggregators prevent malicious actors from exploiting single-source vulnerabilities that could compromise DeFi protocols and applications.

How do oracle aggregators differ from single oracles?

Single oracles rely on one data source, creating vulnerability to manipulation, downtime, and inaccuracies. Oracle aggregators pull data from numerous independent providers, apply consensus mechanisms, and deliver verified results. This distributed approach offers superior reliability, fault tolerance, and resistance to attacks compared to centralized single-source oracle solutions.

Which consensus mechanisms do oracle aggregators use?

Oracle aggregators employ various consensus models including median calculation, weighted averaging, threshold signatures, and outlier detection algorithms. Some use reputation-based weighting where reliable sources receive higher influence. Advanced aggregators combine multiple methods, selecting approaches based on data type, latency requirements, and security needs for specific use cases.

Can oracle aggregators work across multiple blockchains?

Yes, modern oracle aggregators support cross-chain functionality, delivering consistent data across multiple blockchain networks simultaneously. This interoperability enables decentralized applications to maintain data consistency whether operating on Ethereum, Polygon, Avalanche, or other chains. Cross-chain aggregators are essential for multi-network DeFi protocols and bridging solutions.

What determines oracle aggregator gas costs?

Oracle aggregator gas costs depend on the number of sources queried, consensus complexity, and storage requirements. While aggregators consume more gas than single oracles, optimizations like off-chain computation with on-chain verification reduce expenses significantly. Many aggregators batch updates and use efficient data structures to minimize transaction costs.

How do oracle aggregators detect data manipulation?

Oracle aggregators detect manipulation through statistical analysis, outlier rejection, and source reputation tracking. When data from one source deviates significantly from consensus, the aggregator flags or excludes it. Time-weighted averaging and historical comparison help identify sudden price spikes or flash loan attacks attempting to exploit oracle-dependent protocols.

Reviewed & Edited By

Aman Vaths

Founder of Nadcab Labs

Aman Vaths is the Founder & CTO of Nadcab Labs, a global digital engineering company delivering enterprise-grade solutions across AI, Web3, Blockchain, Big Data, Cloud, Cybersecurity, and Modern Application Development. With deep technical leadership and product innovation experience, Aman has positioned Nadcab Labs as one of the most advanced engineering companies driving the next era of intelligent, secure, and scalable software systems. Under his leadership, Nadcab Labs has built 2,000+ global projects across sectors including fintech, banking, healthcare, real estate, logistics, gaming, manufacturing, and next-generation DePIN networks. Aman’s strength lies in architecting high-performance systems, end-to-end platform engineering, and designing enterprise solutions that operate at global scale.